Environmental Perception

How a vehicle senses the environment

Before performing any action, a vehicle has to be aware of its environment. As human drivers, we rely mostly on our sense of sight. It is therefore natural to equip a vehicle with multiple cameras, e.g., for lane detection.

Is this enough to drive autonomously?

In some scenarios, visual perception reaches its limits: extreme weather conditions such as fog or heavy rain, certain lighting conditions such as direct sunlight or darkness at night, and occlusion all restrict our visual representation of the environment. Human drivers also use other senses to assess certain situations: an approaching emergency vehicle, for example, is often heard before it is seen. In addition to cameras, vehicles therefore mainly use radar, lidar, and ultrasonic sensors, while GPS sensors are used for positioning.

Sensor data differ, e.g., in quality. A first step is therefore to install redundant sensors. Afterwards, sensor fusion is essential to obtain a consistent view of the environment: it combines the strengths of some sensors to compensate for the weaknesses of others.
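As a minimal illustration of how fusion lets one sensor compensate for another, the sketch below combines independent estimates of the same distance by inverse-variance weighting, a basic building block of Kalman-filter-style fusion. The text does not say which fusion method is used in practice; the function name, the sensor pairing, and all distances and variances are hypothetical.

```python
from typing import List, Tuple


def fuse(measurements: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Fuse independent (value, variance) estimates of one quantity.

    Each estimate is weighted by 1 / variance, so more precise sensors
    contribute more. The fused variance is smaller than every input variance.
    """
    if any(var <= 0.0 for _, var in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Hypothetical example: a radar (precise range) and a camera (less precise
# range) both measure the distance to the same vehicle ahead, in metres.
radar = (20.0, 0.25)
camera = (21.0, 1.0)
fused_distance, fused_variance = fuse([radar, camera])
```

The fused estimate lies closer to the more precise radar reading, and its variance is lower than that of either sensor alone, which is the "compensation" effect described above in its simplest form.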

📡 This is what the @ousterlidar sensors saw during a lap of our Zala Zone track 💻 pic.twitter.com/cKcBDAIjaD

— Roborace (@roborace) 27. August 2019

Localization without GPS

Localizing a vehicle while driving is also possible without GPS sensors. This has been demonstrated using a 360° lidar sensor while driving autonomously at more than 90 km/h with lateral accelerations above 1 g. The video shows the recorded sensor data from a bird's-eye view and a third-person view.

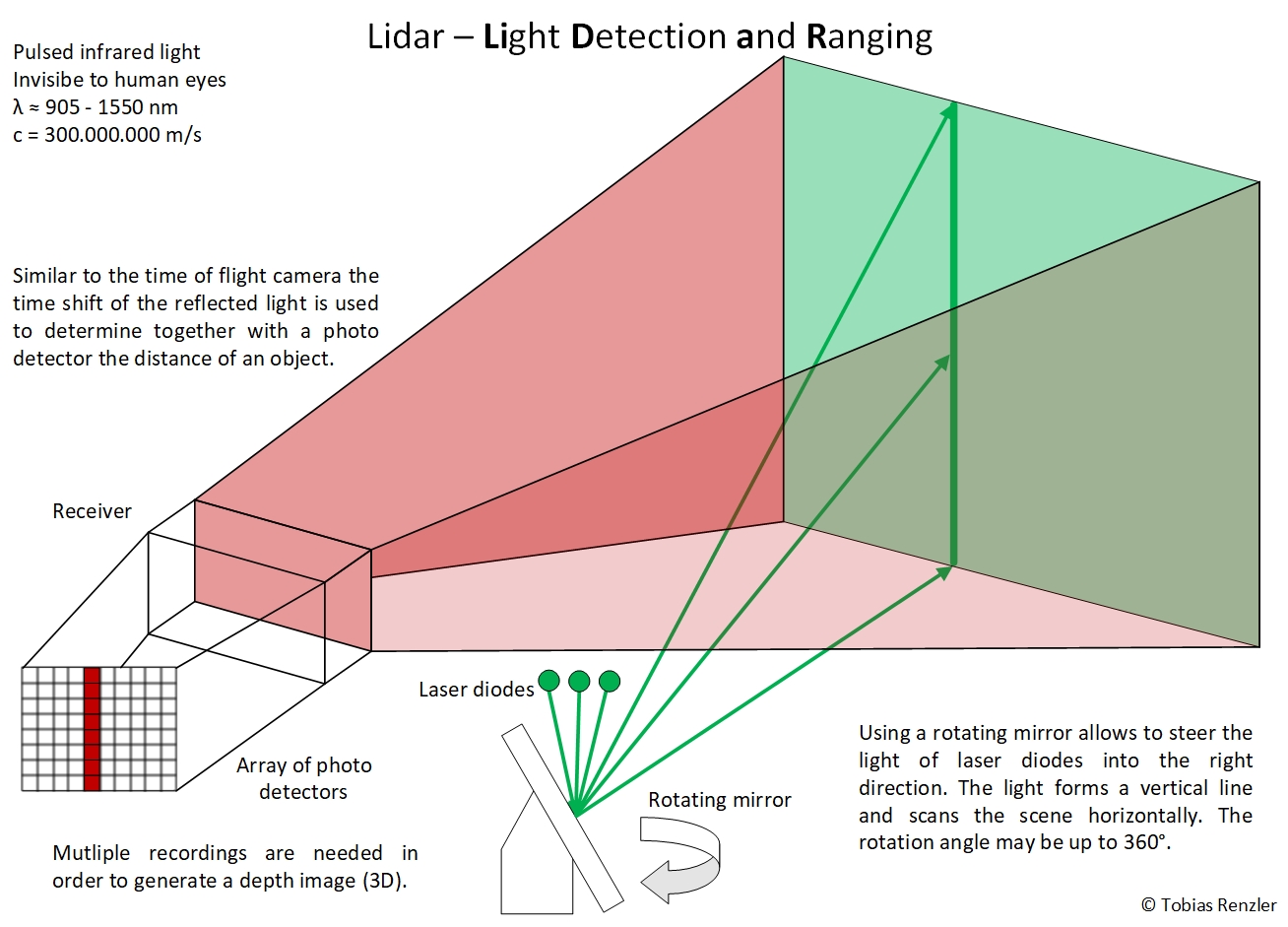
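The text does not state how the vehicle localized itself without GPS. One widely used family of lidar-based methods is scan matching, which estimates the vehicle's pose by aligning the current scan with a previously recorded map. The sketch below is a minimal 2D iterative closest point (ICP) loop in pure Python, assuming noise-free points, a reasonable initial guess, and brute-force nearest-neighbour search; all function names and the example data are hypothetical, and this is not claimed to be the method used in the demonstration.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Pose = Tuple[float, float, float]  # (heading in rad, x offset, y offset)


def transform(points: List[Point], theta: float, tx: float, ty: float) -> List[Point]:
    """Rotate points by theta, then translate them by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def best_rigid_transform(src: List[Point], dst: List[Point]) -> Pose:
    """Closed-form least-squares rotation and translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    a = b = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        a += px * qx + py * qy  # sum of dot products of centred points
        b += px * qy - py * qx  # sum of cross products of centred points
    theta = math.atan2(b, a)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def nearest_index(point: Point, ref: List[Point]) -> int:
    """Brute-force nearest neighbour (fine for a sketch, too slow for real scans)."""
    x, y = point
    return min(range(len(ref)), key=lambda j: (ref[j][0] - x) ** 2 + (ref[j][1] - y) ** 2)


def icp(scan: List[Point], reference_map: List[Point], max_iterations: int = 20) -> Pose:
    """Estimate the pose that maps the scan onto the map, starting from the identity."""
    pose: Pose = (0.0, 0.0, 0.0)
    previous: Optional[List[int]] = None
    for _ in range(max_iterations):
        moved = transform(scan, *pose)
        matches = [nearest_index(p, reference_map) for p in moved]
        if matches == previous:  # correspondences no longer change: converged
            break
        previous = matches
        pose = best_rigid_transform(scan, [reference_map[j] for j in matches])
    return pose


# Hypothetical example: an L-shaped scan of a wall corner; the "map" is the
# same shape rotated by 2 degrees and shifted by (0.1, 0.05) m.
scan = [(float(i), 0.0) for i in range(6)] + [(0.0, float(j)) for j in range(1, 4)]
reference_map = transform(scan, math.radians(2.0), 0.1, 0.05)
estimate = icp(scan, reference_map)
```

Real systems work with noisy 3D scans, use spatial indices such as k-d trees for the nearest-neighbour search, and reject outliers; the sketch only shows the core alternation between matching points and solving for the pose.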

A lidar sensor uses pulsed infrared light to determine the distance to objects: it measures the time each pulse takes to travel to an object and back. In the automotive industry, mostly 1D scanning lidars are used: multiple beams of infrared light are aligned vertically, and after each measurement a rotating mirror moves the beams horizontally to the next position. Rotation speeds of 10 to 20 Hz are common, meaning that an updated view of the environment is available every 50 to 100 ms.
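The measurement principle described above can be sketched in a few lines: the range follows from the round-trip time t of a pulse as r = c·t/2, the rotation frequency gives the update period, and each vertical column of beams at one mirror position can be projected into 3D points. The function names, the beam elevation angles, and the coordinate convention (x forward, y left, z up) are assumptions for illustration, not the interface of any particular sensor.

```python
import math
from typing import List, Tuple

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def range_from_time_of_flight(round_trip_s: float) -> float:
    """The pulse travels to the object and back, so halve the distance covered."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def scan_period_ms(rotation_hz: float) -> float:
    """Time for one full rotation, i.e. until a complete new view is available."""
    return 1000.0 / rotation_hz


def column_to_points(azimuth_deg: float, elevations_deg: List[float],
                     ranges_m: List[float]) -> List[Tuple[float, float, float]]:
    """Project one vertical column of beams (one mirror position) into 3D.

    Assumed frame: x forward, y left, z up; azimuth measured from x towards y.
    """
    az = math.radians(azimuth_deg)
    points = []
    for el_deg, r in zip(elevations_deg, ranges_m):
        el = math.radians(el_deg)
        horizontal = r * math.cos(el)  # distance projected onto the ground plane
        points.append((horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el)))
    return points


# Hypothetical column of three beams at 30 degrees azimuth.
column = column_to_points(30.0, [-15.0, 0.0, 15.0], [12.0, 25.0, 40.0])
```

A full scan is simply this projection repeated for every mirror position during one rotation, so at 20 Hz a new point cloud is produced every 50 ms.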